Crystal

Crystal is a question answering application that I built as my capstone
project at PSUT. It can parse natural language sentences, recognize word
senses and make logical inferences. It's not domain-specific, so vocabulary
coverage is quite good. Grammar coverage, on the other hand, is fairly basic.
Probably the most glaring hole is the complete lack of support for adverbs,
although there are plenty of smaller flaws (numbers, proper support for
plurals, etc.).

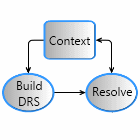

The workflow is pretty simple. The user can tell Crystal facts in the form of
declarative sentences, including conditionals and compound statements. Crystal
incrementally builds up a context and checks every new statement against it,
resolving anaphora (pronouns) and presuppositions (definite NPs). At any point
in the interaction, the user can ask Crystal subject or object questions, which
will be answered (if possible) based on the context.
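As a rough illustration of this tell-and-ask loop, here is a toy sketch in Python. It is not Crystal's code: the `Context` class and its method names are made up for this example, facts are plain (predicate, entity) pairs rather than parsed sentences, and simple forward chaining stands in for the real inference system.

```python
class Context:
    """Toy discourse context: ground facts plus one-variable if-then rules."""

    def __init__(self):
        self.facts = set()   # e.g. ("man", "Socrates")
        self.rules = []      # e.g. ("man", "mortal") for "Every man is mortal."

    def tell_fact(self, predicate, entity):
        self.facts.add((predicate, entity))
        self._saturate()

    def tell_rule(self, if_predicate, then_predicate):
        self.rules.append((if_predicate, then_predicate))
        self._saturate()

    def _saturate(self):
        # Forward chaining: apply every rule until no new fact appears.
        changed = True
        while changed:
            changed = False
            for if_pred, then_pred in self.rules:
                for pred, entity in list(self.facts):
                    new_fact = (then_pred, entity)
                    if pred == if_pred and new_fact not in self.facts:
                        self.facts.add(new_fact)
                        changed = True

    def ask(self, predicate, entity):
        # Answer from the context only; anything not derivable is unknown.
        if (predicate, entity) in self.facts:
            return "Yes."
        return "That is unknown."

    def who(self, predicate):
        # Subject question: every entity known to satisfy the predicate.
        return sorted(e for p, e in self.facts if p == predicate)


context = Context()
context.tell_fact("man", "Socrates")
print(context.ask("mortal", "Socrates"))   # nothing links "man" to "mortal" yet
context.tell_rule("man", "mortal")
print(context.ask("mortal", "Socrates"))   # now derivable from the rule
```

The point of the sketch is only that answers depend on what has been said so far: the same question gets a different answer once the rule is part of the context.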

Implementation

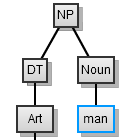

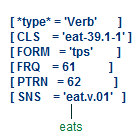

The program is written in Python and uses the NLTK chart parser with a
VerbNet-based unification grammar for parsing, DRT techniques for converting
parse trees into semantic representations, a vocabulary derived from WordNet®
and VerbNet semantic limitations for word senses, and finally an inference
system powered by the Prover9/Mace4 theorem prover and model builder.
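To give a feel for the last stage, the sketch below renders a small problem in Prover9's input syntax, using the farmer and mule example from the sample sessions. The helper `prover9_input`, the predicate names (`farmer`, `mule`, `own`, `beat`) and the constant `mule1` are assumptions made for illustration. How Crystal actually encodes its semantic representations for the prover is not described on this page.

```python
def prover9_input(assumptions, goal):
    """Render assumptions and one goal as Prover9 input text."""
    lines = ["formulas(assumptions)."]
    lines += [f"  {formula}." for formula in assumptions]
    lines += ["end_of_list.", "", "formulas(goals).", f"  {goal}.", "end_of_list."]
    return "\n".join(lines)


# Hypothetical first-order rendering of:
#   "Ahmad is a farmer and has a mule."
#   "If a farmer owns a mule, he beats it."
assumptions = [
    "farmer(ahmad)",
    "mule(mule1)",
    "own(ahmad, mule1)",
    "all x all y ((farmer(x) & mule(y) & own(x, y)) -> beat(x, y))",
]

# Goal corresponding to the answer "Ahmad's mule." for "Whom does Ahmad beat?"
problem = prover9_input(assumptions, "beat(ahmad, mule1)")
print(problem)
```

The resulting text can be fed to the `prover9` binary on standard input. The universal quantifiers over both the farmer and the mule reflect the usual DRT reading of a donkey sentence.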

When I started working on Crystal, I had never touched NLP, so the experience
has taught me a lot about parsing, linguistics, semantics and logical
inference. Of course, this also means that the code is riddled with
algorithms, techniques and design decisions that will cause many a facepalm
among linguists, NLP practitioners and even software engineers, for which I
apologize in advance. This state of affairs is exacerbated by the fact that
the whole project, from inception to completion, took about two months of
part-time work from a single developer whose goals did not include
maintainability.

The performance of the system is pretty abysmal, taking about a second or two
per sentence for simple sentences on a midrange PC, 5-10 seconds for complex
or highly ambiguous sentences and up to several minutes in degenerate cases.
The bottlenecks are mostly fixable, but since the system was never meant to be
more than a proof of concept, I do not plan to ever fix them properly. Memory
usage is similarly ugly, at about 800 MB when the full WordNet vocabulary is
loaded. Rewriting the NLTK parser in C/C++ should reduce that by an order of
magnitude.

Sample Sessions

The original project demo included a number of sessions which showcase
particularly interesting features of Crystal. Keep in mind that these were
hand-picked, so while none of them are scripted, they still represent the best
cases. The average experience with Crystal's understanding will not be as
impressive.

Note: Input is in blue, logging in black and output in green.

-

Basic sentence understanding and anaphora resolution.

-

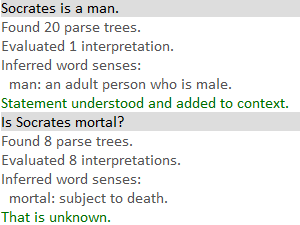

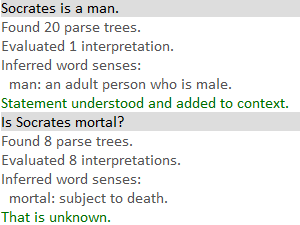

Basic logical inference.

-

Handling of "donkey sentences".

-

Part of speech disambiguation.

-

Sense disambiguation.

-

Presupposition resolution.

-

Various takes on possessives.

Alice and Vincent are waltzing in the hall.

Found 13 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

waltzing: dance a waltz.

hall: an interior passage or corridor onto which rooms open.

Statement understood and added to context.

She is happy.

Found 5 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

happy: enjoying or showing or marked by joy or pleasure.

Statement understood and added to context.

Who is waltzing?

Found 3 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

waltzing: dance a waltz.

Alice and Vincent.

Who is dancing?

Found 12 parse trees.

Evaluated 4 interpretations.

Inferred word senses:

dancing: move in a pattern; usually to musical accompaniment; do or perform a dance.

Alice and Vincent.

Who is happy?

Found 15 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

happy: enjoying or showing or marked by joy or pleasure.

Alice.

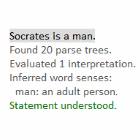

Socrates is a man.

Found 20 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

man: an adult person who is male (as opposed to a woman).

Statement understood and added to context.

Is Socrates mortal?

Found 8 parse trees.

Evaluated 8 interpretations.

Inferred word senses:

mortal: subject to death.

That is unknown.

Every man is mortal.

Found 44 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

man: an adult person who is male (as opposed to a woman).

mortal: subject to death.

Statement understood and added to context.

Is Socrates mortal?

Found 8 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

mortal: subject to death.

Yes.

Ahmad is a farmer and has a mule.

Found 2 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

farmer: a person who operates a farm.

has: have ownership or possession of.

mule: hybrid offspring of a male donkey and a female horse; usually sterile.

Statement understood and added to context.

Whom does Ahmad beat?

Found 15 parse trees.

Evaluated 15 interpretations.

Inferred word senses:

beat: give a beating to; subject to a beating, either as a punishment or as an act of aggression.

No known entities match the query.

If a farmer owns a mule, he beats it.

Found 120 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

farmer: a person who operates a farm.

owns: have ownership or possession of.

mule: hybrid offspring of a male donkey and a female horse; usually sterile.

beats: give a beating to; subject to a beating, either as a punishment or as an act of aggression.

Statement understood and added to context.

Whom does Ahmad beat?

Found 15 parse trees.

Evaluated 2 interpretations.

Inferred word senses:

beat: give a beating to; subject to a beating, either as a punishment or as an act of aggression.

Ahmad's mule.

Many soldiers desert the desert base.

Found 38 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

soldiers: an enlisted man or woman who serves in an army.

desert: leave someone who needs or counts on you; leave in the lurch.

desert: arid land with little or no vegetation.

base: installation from which a military force initiates operations.

Statement understood and added to context.

What do the men desert?

Found 11 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

men: someone who serves in the armed forces; a member of a military force.

desert: leave someone who needs or counts on you; leave in the lurch.

The desert base.

Mia is sitting on a grassy bank.

Found 90 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

sitting: be seated.

grassy: abounding in grass.

bank: sloping land (especially the slope beside a body of water).

Statement understood and added to context.

The bank denied John's loan.

Found 88 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

bank: a financial institution that accepts deposits and channels the money into lending activities.

denied: refuse to recognize or acknowledge.

loan: the temporary provision of money (usually at interest).

Statement understood and added to context.

The blood bank needs donations.

Found 896 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

blood bank: a place for storing whole blood or blood plasma.

needs: require as useful, just, or proper.

donations: a voluntary gift (as of money or service or ideas) made to some worthwhile cause.

Statement understood and added to context.

A cat is chasing a mouse.

Found 56 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

cat: feline mammal usually having thick soft fur and no ability to roar: domestic cats; wildcats.

chasing: go after with the intent to catch.

mouse: any of numerous small rodents typically resembling diminutive rats having pointed snouts and small ears on elongated bodies with slender usually hairless tails.

Statement understood and added to context.

The rodent is scared.

Found 1 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

rodent: relatively small placental mammals having a single pair of constantly growing incisor teeth specialized for gnawing.

scared: made afraid.

Statement understood and added to context.

What does the cat chase?

Found 18 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

cat: feline mammal usually having thick soft fur and no ability to roar: domestic cats; wildcats.

chase: go after with the intent to catch.

The frightened mouse.

An elephant is a large mammal.

Found 16 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

elephant: five-toed pachyderm.

large: above average in size or number or quantity or magnitude or extent.

mammal: any warm-blooded vertebrate having the skin more or less covered with hair; young are born alive except for the small subclass of monotremes and nourished with milk.

Statement understood and added to context.

It has a snout and tusks.

Found 3 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

has: have ownership or possession of.

snout: a long projecting or anterior elongation of an animal's head; especially the nose.

tusks: a long pointed tooth specialized for fighting or digging; especially in an elephant or walrus or hog.

Statement understood and added to context.

The ears of the elephant are large.

Found 70 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

ears: the sense organ for hearing and equilibrium.

elephant: five-toed pachyderm.

large: above average in size or number or quantity or magnitude or extent.

Statement understood and added to context.

The animal's hide is grey.

Found 16 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

animal: a living organism characterized by voluntary movement.

hide: body covering of a living animal.

grey: of an achromatic color of any lightness intermediate between the extremes of white and black.

Statement understood and added to context.

What does the elephant have?

Found 2 parse trees.

Evaluated 1 interpretation.

Inferred word senses:

elephant: five-toed pachyderm.

have: have ownership or possession of.

The grey hide, the snout, the large ears and the tusks.

License

Due to library license requirements, this project is licensed under the GNU GPL.

Download

- Documentation: This is a 25-page PDF report detailing the implementation of
  Crystal. It is a good place to start if you are interested in the algorithms
  behind the project or want to tinker with the code.
- GitHub Repository: This can be checked out using git or downloaded as an
  archive. The included README.md file explains how to run the program. Note
  that compiled binaries of Prover9 and Mace4 for Windows and Unix are checked
  into the repository as upstream versions are outdated.
